Killing the Clipboard: Cloud Fax is the Bridge to Patient-Centric Data Access

By Bevey Miner

Bevey Miner is a healthcare strategist at eFax, a Consensus Cloud Solutions brand.

The Trump Administration’s renewed focus on interoperability has reignited long-standing calls for healthcare to “Kill the Clipboard.” This movement aims to eliminate the administrative burden and data silos created by paper-based processes, enabling near-instant access to searchable, actionable patient information.

The industry broadly supports modernization efforts, with patient access at the forefront. But we need to ensure that this digital transformation doesn’t leave small, rural, and under-resourced communities behind.

The paper problem: why change takes time

We cannot wait for every provider to achieve a perfect, fully digital state before we start delivering on the promise of interoperability. Patients must have access to their data now, even if parts of the industry are still using clipboards and paper fax.

The federal initiative aims to give patients near-instant access to their health records and to give providers real-time patient data that dramatically speeds care coordination. Paper records sent over outdated fax machines do not support this goal and often impede it. The administration is leaning heavily on data networks and vendors to streamline the exchange of information between healthcare providers, while modernizing standards around FHIR APIs.

Conceptually, the future we are all working toward offers faster data access, searchable and actionable information to improve care, and seamless communication between care teams. But this idealized future state does not account for the practical limitations facing many of healthcare’s foundational organizations.

Twenty-nine percent of providers report that they lack the financial resources needed to deploy the advanced digital infrastructure that today’s interoperability vision requires.

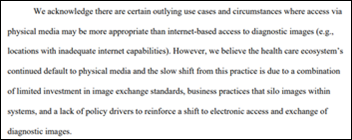

Many organizations, such as rural and smaller post-acute care settings, are still playing catch-up because they were excluded from the incentives that accompanied the HITECH Act of 2009. Some of these organizations have an EHR, but it may be outdated or uncertified. Others rely on scrappier, home-grown solutions or even paper-based, manual processes.

These smaller organizations may not have million-dollar EHR platforms, but they do have paper fax. To take part in the move to “Kill the Clipboard,” organizations of all sizes are turning to digital cloud fax.

Cloud fax: healthcare’s guilty pleasure

A recent survey found that 46% of healthcare facilities still use paper fax to send and receive patient data. If the healthcare industry is so dedicated to moving past paper, why do these archaic systems persist?

The simple answer is that, while we work toward replacing the paper fax machine with structured data formats like FHIR, we still need a step in between, the next level of communication maturity: cloud fax. Once a fax becomes digital, additional data-sharing capabilities become possible.

Cloud fax offers all the benefits of paper fax and is much more efficient. It is easy to use and can be fully integrated into other applications via APIs. For decades, fax has served as the standard method for transmitting documents and data in healthcare because it checks many boxes. Cloud fax can meet HIPAA requirements and HITRUST standards, and fax is universally compatible with systems that otherwise operate in silos.

Simply put, cloud fax is the most common and accessible form of send-and-receive communication in our industry. Calls to end its ubiquitous use overlook current operational realities, as well as the power of digital transformation to modernize and integrate cloud fax rather than simply eliminate it.

Send, receive, find: AI-powered digital cloud fax goes the extra mile

Digital cloud fax provides robust send and receive capabilities, but the CMS definition of interoperability also requires the ability to find information, and to be found, data must be discoverable. New AI capabilities are helping fax go the extra mile by using intelligent data extraction to turn traditionally unstructured, static documents into structured, actionable insights. This is critical to advancing interoperability because as much as 80% of healthcare data remains unstructured.

Advances in machine learning and large language models (LLMs) make it possible to extract unstructured data from digital faxes, scanned images, TIFFs, and PDFs across nearly any type of health document, including intake content, claims, handwritten forms, and more, and to place it directly into a structured system like an EHR or a payer workflow. When these AI tools are built on digital cloud fax platforms, they leverage a technology that most healthcare organizations already have in place. Implementation is significantly easier and faster than adding an entirely new system to an organization’s already overloaded and fragmented tech stack.
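To make the “place it directly into a structured system” step a little more concrete, here is a minimal sketch in Python. It assumes an upstream extraction step (OCR plus an ML or LLM model, not shown) has already turned a faxed intake form into a flat dictionary of fields. The field names (`last_name`, `first_name`, `dob`, `sex`, `phone`) and the helper `intake_to_fhir_patient` are hypothetical; the output follows the shape of a minimal FHIR R4 Patient resource, the kind of structured payload a FHIR API could accept.

```python
import json
from datetime import datetime

# Hypothetical mapping from free-text sex values, as they might appear on a
# scanned form, to FHIR administrative-gender codes. Deliberately small.
_GENDER_CODES = {
    "m": "male",
    "male": "male",
    "f": "female",
    "female": "female",
}


def intake_to_fhir_patient(fields: dict) -> dict:
    """Map hypothetical extracted intake-form fields to a minimal FHIR R4 Patient."""
    patient = {"resourceType": "Patient"}

    # FHIR HumanName: family is a string, given is a list of strings.
    family = fields.get("last_name", "").strip()
    given = fields.get("first_name", "").strip()
    if family or given:
        name = {}
        if family:
            name["family"] = family
        if given:
            name["given"] = [given]
        patient["name"] = [name]

    # Assumes the form uses US-style MM/DD/YYYY; FHIR dates are YYYY-MM-DD.
    dob = fields.get("dob", "").strip()
    if dob:
        patient["birthDate"] = datetime.strptime(dob, "%m/%d/%Y").date().isoformat()

    # Unrecognized values fall back to the FHIR code "unknown".
    sex = fields.get("sex", "").strip().lower()
    if sex:
        patient["gender"] = _GENDER_CODES.get(sex, "unknown")

    phone = fields.get("phone", "").strip()
    if phone:
        patient["telecom"] = [{"system": "phone", "value": phone}]

    return patient


if __name__ == "__main__":
    extracted = {
        "last_name": "Rivera",
        "first_name": "Ana",
        "dob": "03/14/1958",
        "sex": "F",
        "phone": "555-0100",
    }
    print(json.dumps(intake_to_fhir_patient(extracted), indent=2))
```

Fields the extractor could not find are simply left out rather than guessed. In practice, values pulled from low-quality scans or handwriting would also need validation or human review before being written to an EHR; this sketch skips that step.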

Offering strong reliability and security, intelligent digital cloud fax acts as a connector across data files and formats, sharing both structured and unstructured data among healthcare organizations at every level of digital sophistication.

Time to face the fax

For many healthcare organizations, digital cloud fax isn’t a roadblock but an accelerator, enabling them to keep up with more tech-savvy counterparts without a heavy investment in rip-and-replace technology. It also supports the ongoing FHIR mandates and regulatory changes affecting providers at every level.

By recognizing digital cloud fax as a necessary part of day-to-day operations, as it is at most healthcare organizations, we can better understand how this tool can help us reach interoperability faster, while facilitating the digital transformation of as many organizations as possible.

Healthcare’s reliance on digital cloud fax should not be treated as a guilty secret. Instead, it’s an equalizer and an opportunity. Once we realize its full potential, interoperability initiatives will be more achievable and successful than ever.
